Hello everyone! The previous two weeks have been an emotional and professional roller-coaster for me. It was tough saying goodbye to Etienne, who was such a lovely mentor for almost five weeks, but there was also the joy of welcoming Stanislav (our new mentor!). My parents visited me from India and then left, and I participated in my first ever Fourth of July parade (my first year in the US, remember?) dressed as a gigantic brain. And of course my project had its own ups and downs, which I shall explain in detail below.

As I mentioned in my last blog post, I was finally successful in both finding the mu rhythms and detecting their suppression during hand movement. The tricky part was seeing the suppression when a subject is asked to only imagine hand movement. It’s tricky because the subject needs to focus all of their thoughts on moving their hand and block out everything else. It’s also hard not to think about moving when asked not to. Sounds freaky, I know, but every time I asked a subject to relax and not think about movement, they seemed to think about it even more. Only a few candidates managed it well, and I believe that with a little bit of training everyone can. Hence, in search of these candidates, I spent most of my time collecting data from a lot of people.

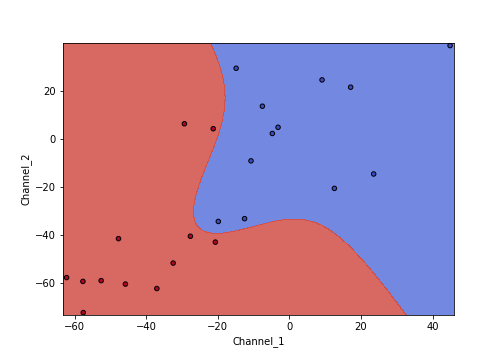

Simultaneously, I invested a lot of time in brainstorming about what the next step should be. The main goal is to classify when a person is thinking about movement, and a machine learning approach to this classification needs features: properties of the EEG recordings that differ between when a person is thinking about movement and when the person is relaxed. Currently, I am trying my luck with the percentage of power suppression, i.e., the difference between the power in the mu-rhythm band (8-14 Hz) when relaxed and the power during motor imagery. Theoretically, the power during motor imagery should be much lower, which gives a bigger difference. This works for those candidates who are able to focus their thoughts on only hand movement and have absolutely no thoughts of movement when asked not to. Here’s a plot of these features and the decision boundary that my classifier made:

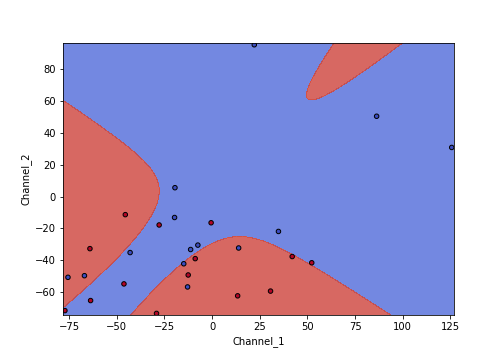
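For the curious, the suppression feature described above can be sketched in a few lines of Python. This is only a minimal illustration under some assumptions: the function names `band_power` and `mu_suppression_percent` are made up for this example, the 250 Hz sampling rate is a placeholder, the percentage is taken relative to the resting power, and the two "recordings" are synthetic sine waves plus noise rather than real EEG.

```python
import numpy as np

def band_power(signal, fs, low=8.0, high=14.0):
    """Mean periodogram power of `signal` between `low` and `high` Hz."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()  # remove the DC offset
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    psd = np.abs(np.fft.rfft(signal)) ** 2 / (fs * signal.size)
    in_band = (freqs >= low) & (freqs <= high)
    return psd[in_band].mean()

def mu_suppression_percent(rest, imagery, fs):
    """Drop in mu-band power during imagery, as a percentage of resting power
    (normalising by the resting power is an assumption of this sketch)."""
    p_rest = band_power(rest, fs)
    p_imagery = band_power(imagery, fs)
    return 100.0 * (p_rest - p_imagery) / p_rest

# Synthetic demo data, NOT real recordings.
fs = 250                          # assumed sampling rate in Hz
t = np.arange(4 * fs) / fs        # 4 seconds of samples
rng = np.random.default_rng(0)
noise = lambda: 0.1 * rng.standard_normal(t.size)

rest = 2.0 * np.sin(2 * np.pi * 10 * t) + noise()     # strong 10 Hz rhythm
imagery = 1.0 * np.sin(2 * np.pi * 10 * t) + noise()  # amplitude halved

suppression = mu_suppression_percent(rest, imagery, fs)
print(f"mu suppression: {suppression:.1f}%")
```

Halving the amplitude of the 10 Hz rhythm cuts its power to about a quarter, so the sketch reports a large positive suppression. On real data a smoother spectral estimate (Welch's method, for example) would probably be a better choice than a raw periodogram.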

I used a Support Vector Machine to classify my test data, and it successfully found a decision boundary that separates movement from non-movement. However, this was not possible for all the candidates, as shown in another example below:

As you can see, there are a bunch of misclassified states (red markers in the blue area and vice versa).

My next steps are to implement a real-time detection system for all those subjects whose data I can classify with decent accuracy, and simultaneously to make changes to my data collection protocol for those subjects where the distinction isn’t clear.
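To make the classification step a bit more concrete, here is a small sketch of training an SVM on two suppression features per trial. It assumes scikit-learn and a linear kernel, and the feature values are randomly generated clusters standing in for "imagery" and "rest" trials, so the accuracy it prints says nothing about real subjects.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

rng = np.random.default_rng(42)
n_trials = 100

# Synthetic stand-ins for two suppression features (in percent) per trial.
# The cluster centres and spread are invented for illustration only.
imagery_trials = rng.normal(loc=[40.0, 35.0], scale=8.0, size=(n_trials, 2))
rest_trials = rng.normal(loc=[0.0, 0.0], scale=8.0, size=(n_trials, 2))

X = np.vstack([imagery_trials, rest_trials])
y = np.array([1] * n_trials + [0] * n_trials)  # 1 = imagery, 0 = rest

# Hold out 30% of the trials so accuracy is measured on unseen data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

clf = SVC(kernel="linear", C=1.0)  # linear kernel is an assumption
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)
print(f"held-out accuracy: {accuracy:.2f}")
```

When the two clusters overlap, as they do for subjects who struggle with motor imagery, the same code produces misclassified points on both sides of the boundary, and the held-out accuracy drops accordingly.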

With just two weeks to go, there seems to be a lot of work to get done in a short span. But hopefully I will get it done! Fingers crossed! Lastly, one notable change that has occurred in the lab is that everyone is hooked on ‘Teenage Dirtbag’ by Wheatus, thanks to Greg humming it every day for the past two weeks!