G’day mate, how are ya?

Thank you for waiting for an update on my Human EEG Decoding (aka Mind Reading) research.

Since my last post, I have tried to decode human EEG data in real time and… guess what… I succeeded! Hooray!

I first analyzed all the data I had collected so far to verify and compare the patterns of brain signals evoked by different images.

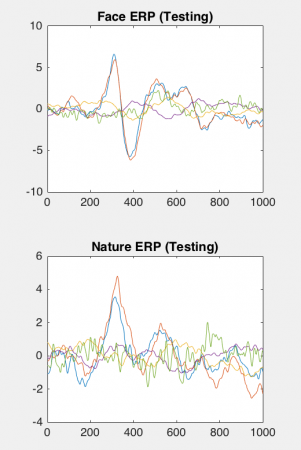

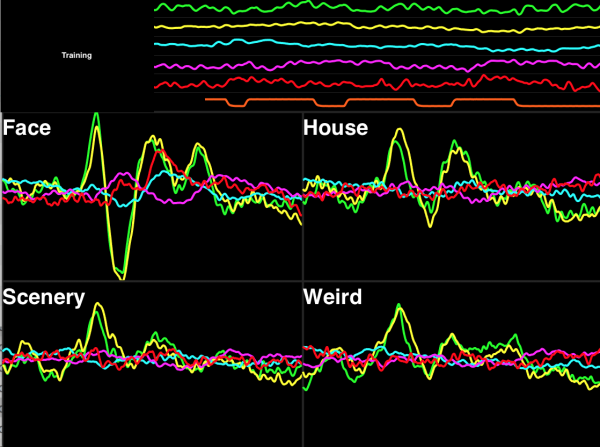

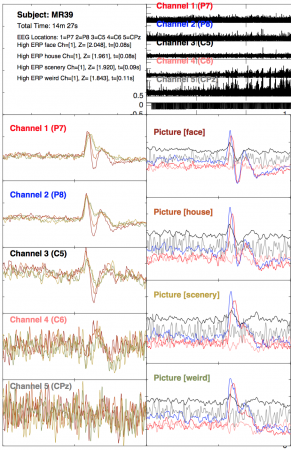

I analyzed the raw EEG signals and the ERPs (event-related potentials) for each channel and each image category. I could clearly see the N170, a negative ERP component that peaks roughly 170 ms after stimulus onset, when a face was presented, while it was mostly absent for the other images. However, there was no obvious difference among the other categories (houses, scenery, weird pictures). This indicated that, at least with my current experimental design, I should begin by focusing on classifying face vs. non-face events.
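The ERP analysis above comes down to three steps. First, cut the EEG into short epochs around each stimulus onset. Second, average the epochs within each image category. Third, compare the averaged amplitude in the N170 window. The original analysis was done in MATLAB, so the Python sketch below is only an illustration for a single channel. Several details are assumptions, not values from the experiment: the function names (`extract_epochs`, `average_erp`, `window_mean`), the 250 Hz sampling rate, the 100 ms pre-stimulus baseline, and the 150 to 200 ms N170 window. The usage part runs on a synthetic signal, not on recorded data.

```python
from collections import defaultdict
from statistics import mean

FS = 250                     # assumed sampling rate in Hz (hypothetical)
PRE_MS, POST_MS = 100, 400   # assumed epoch: 100 ms before to 400 ms after onset

def ms_to_samples(ms, fs=FS):
    # integer arithmetic avoids floating-point rounding surprises
    return ms * fs // 1000

def extract_epochs(signal, events, fs=FS):
    """Cut one channel into baseline-corrected epochs, grouped by label.

    events: list of (onset_sample, label) pairs, e.g. from stimulus markers.
    """
    pre, post = ms_to_samples(PRE_MS, fs), ms_to_samples(POST_MS, fs)
    epochs = defaultdict(list)
    for onset, label in events:
        if onset - pre < 0 or onset + post > len(signal):
            continue  # skip events too close to the edges of the recording
        epoch = signal[onset - pre:onset + post]
        baseline = mean(epoch[:pre])  # mean of the pre-stimulus interval
        epochs[label].append([v - baseline for v in epoch])
    return epochs

def average_erp(epochs):
    """Average the epochs of each category sample by sample to get its ERP."""
    return {label: [mean(col) for col in zip(*trials)]
            for label, trials in epochs.items()}

def window_mean(erp, start_ms, end_ms, fs=FS):
    """Mean ERP amplitude between start_ms and end_ms after stimulus onset."""
    offset = ms_to_samples(PRE_MS, fs)
    a = offset + ms_to_samples(start_ms, fs)
    b = offset + ms_to_samples(end_ms, fs)
    return mean(erp[a:b])

# Hypothetical synthetic recording: a -5 uV dip 150-200 ms after each face onset.
signal = [0.0] * 5000
events = []
for i in range(10):
    onset = 200 + 400 * i
    label = "face" if i % 2 == 0 else "scenery"
    events.append((onset, label))
    if label == "face":
        for k in range(ms_to_samples(150), ms_to_samples(200)):
            signal[onset + k] = -5.0

erps = average_erp(extract_epochs(signal, events))
for label, erp in erps.items():
    print(label, window_mean(erp, 150, 200))  # prints "face -5.0" then "scenery 0.0"
```

Averaging is what makes the N170 visible. Noise that is not time-locked to the stimulus tends to cancel across trials, while the face-evoked deflection adds up. This is also why a single raw trial can look featureless even when the average is clear.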

Then I wrote MATLAB code that collects data from human subjects and decodes their brain signals in real time. In the training session, 240 images in random order (60 from each of the 4 categories) are presented to the subject to train a support vector machine (SVM), a machine learning technique for classifying data into categories. Then, during the testing session, we analyze the EEG response to each randomly ordered image in real time to predict which image is actually being presented. Because the classification had to run in real time, the code was complicated, and I also had to synchronize it with my Python image presentation program…
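For readers curious about the classification step, here is a minimal linear SVM trained by Pegasos-style stochastic subgradient descent on the hinge loss. It is a hypothetical stand-in written in plain Python, not the MATLAB implementation used in this project. A real pipeline would more likely call an established solver, for example scikit-learn's `LinearSVC`. Several elements are assumptions for illustration only: the two-number feature vector per trial (mean amplitude in two post-stimulus windows), the labels (+1 for face, -1 for non-face), and the synthetic trials.

```python
import random

def dot(w, x):
    return sum(wj * xj for wj, xj in zip(w, x))

def add_bias(x):
    # append a constant feature so the bias is learned as an ordinary weight
    return list(x) + [1.0]

def train_linear_svm(X, y, lam=0.01, n_epochs=200, seed=0):
    """Pegasos-style stochastic subgradient descent for a linear SVM.

    X: list of feature vectors; y: labels in {+1, -1}.
    Minimises lam/2 * ||w||^2 + mean(max(0, 1 - y * w.x)).
    """
    rng = random.Random(seed)
    data = [add_bias(x) for x in X]
    w = [0.0] * len(data[0])
    order = list(range(len(data)))
    t = 0
    for _ in range(n_epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)                      # decreasing step size
            violated = y[i] * dot(w, data[i]) < 1      # margin check uses current w
            w = [(1.0 - eta * lam) * wj for wj in w]   # shrinkage from the L2 term
            if violated:                               # hinge loss active: move toward y_i * x_i
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w

def predict(w, x):
    return 1 if dot(w, add_bias(x)) >= 0 else -1

def accuracy(w, X, y):
    return sum(predict(w, x) == yi for x, yi in zip(X, y)) / len(y)

# Hypothetical synthetic trials: features are [mean amplitude 150-200 ms,
# mean amplitude 250-350 ms] in microvolts; faces get an N170-like dip.
gen = random.Random(42)

def fake_trial(is_face):
    n170 = gen.gauss(-5.0 if is_face else 0.0, 1.0)
    return [n170, gen.gauss(0.0, 1.0)], (1 if is_face else -1)

train = [fake_trial(i % 2 == 0) for i in range(240)]
test = [fake_trial(i % 2 == 0) for i in range(100)]
w = train_linear_svm([x for x, _ in train], [lab for _, lab in train])
acc = accuracy(w, [x for x, _ in test], [lab for _, lab in test])
print(f"test accuracy on synthetic data: {acc:.2f}")
```

The 240 training trials and 100 test trials mirror the session sizes described in this post. The data here is simulated, though, so the printed accuracy says nothing about performance on real EEG.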

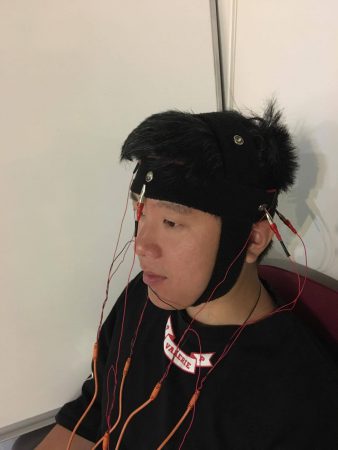

I also had to check the hardware and the surrounding environment for anything that could degrade the performance of the SpikerShields and the classifier! Some blood, sweat, tears, and a lot of electrode gel later… I had it running!

After all the hard work, I began running trials where the goal was to classify face vs. scenery. I chose natural landscapes because they seemed to be the least face-like images. After a training session of 240 images for each subject, we tested 50 face and 50 scenery images (in random order) to check our real-time algorithms.

The results were very satisfying! Christy and Spencer (the coolest BYB research fellows; please see my previous blog post) achieved average accuracies of 93% and 85% over 5 trials, which shows that we can successfully classify brain signals from face vs. non-face presentations. The differences were clear enough to see in the ERPs from the training and testing sessions, but not strong enough to observe in the raw EEG signal of any single channel. However, just because they weren’t obvious to the human eye… doesn’t mean the computer couldn’t decode them! The machine learning algorithms I prepared did an excellent job classifying the raw EEG signals in real time, which suggests that more advanced real-time EEG processing is not far away! We’re edging closer and closer to revolutionary biotech advancements. But for now, it’s just faces and trees.

And now, the capstone of my summer research!

We fellows worked long hours for 6 straight days filming short videos for TED, each of which focused on one of our individual projects!

It was stressful but exciting. I never would have expected I’d have the opportunity to present my research to the world through TED!

My best subject, Christy, generously agreed to take part in my video shoot.

We presented three experiments:

- EEG recording and ERPs

- Real-time decoding with no training trials

- Real-time decoding with training trials

The first experiment showed how we can detect differences between faces and other images in the ERPs through the presence of the N170. The second demonstrated how difficult real-time decoding is without training trials… and the third showed how we can really decode the human brain in real time with limited information and only a few observable channels.

All the experiments were successful, thanks to Greg, TED staff, and Christy!

For the videos, I had to explain what was going on in each experiment and what its results imply. To prepare for those “chat” segments, I needed to work out how best to explain and break down the research for the public, so that viewers could understand and replicate the experiment. The educational format was definitely good practice in preparing and presenting my research for different audiences.

Please check out my TED video when it is released someday! You’ll probably be able to see it here on the BYB website when it launches!

To wrap up, I’ve enjoyed my research these past 11 weeks. Looking back at what I have done this summer, I see how far I’ve come. This fellowship was a valuable experience that improved my software engineering and coding skills across different programming languages and platforms. I also got a crash course in hardware design and electrical engineering! I learned how to design a new experiment from scratch using many different scientific tools. Most importantly, I learned more about the scientific mindset: how to think critically about a project, how to analyze data, and how to avoid unsubstantiated claims and biases.

Even though mind reading was my project, I couldn’t have done it alone. I would like to thank everyone at BYB who supported my project, including Greg, who continues to guide me in the scientific mindset, teaches me how to conduct experiments, and helps me analyze data and present the research effectively to outsiders. Thank you to Stanislav, who put a lot of effort into helping me verify and build my software; to Zach, who helped me build and test the hardware; to Will, who was always there to help me with anything I needed during my time here; and to Christy and Spencer, who were the best subjects, always sparing their time for the sake of science. I am sure that my experience here was a step toward becoming a better researcher. My project is not finished; it has really just begun. I am planning to continue this mind-reading research, and single-channel decoding and classification of non-face images will be the first step after this summer.

Thank you so much for your time and interest in my project. Stay tuned…