—Written by Ariyana Miri—

Welcome back! If you’ve been following along with my FOMO glasses journey, you know I’m trying to build a pair of glasses that capture a photo every time you blink. In my last post, I discussed the idea behind the project and what its success could mean, so check it out if you haven’t had the chance yet!

The next steps in my project are implementing the AI model and figuring out the best way to classify eye blinks in real time, and then working out the hardware needed to actually build the device. Before any of that, though, we first need to understand the data.

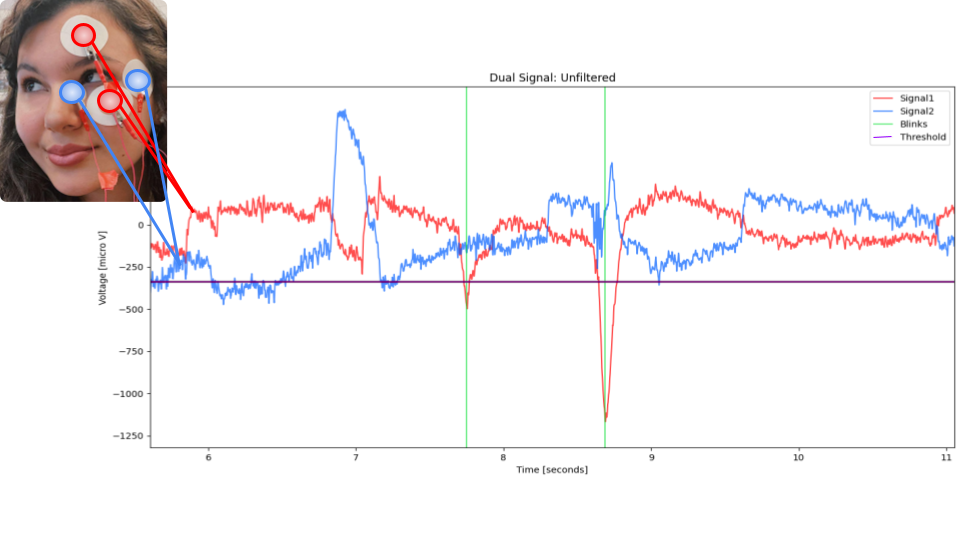

The image above comes from a dataset I recorded on myself: I placed four electrodes around one of my eyes and filmed myself doing everyday things like talking, eating, working, and watching TV. A natural dataset is important because unconscious blinks are much lighter and more subtle than the deliberate blinks you make when you know you’re recording a dataset.

I then go back through the video and mark the data every time I blink; those are the green lines. The red and blue traces are the two signals recorded by the two pairs of electrodes. You always need two electrodes to record a signal, because what you actually measure is the voltage difference between them. I decided to use two signals instead of one so that the AI model has more information to go on when identifying the unique signature of a blink.
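To make the “two electrodes per signal” idea concrete, here is a minimal sketch of how four electrode readings become two channels. Each channel is just the sample-by-sample difference between the two electrodes in a pair. The pairing below, (e1, e2) and (e3, e4), is a hypothetical choice for illustration; the post doesn’t say which electrodes are paired. A nice side effect of taking differences is that interference picked up equally by both electrodes of a pair cancels out.

```python
def bipolar_channels(e1, e2, e3, e4):
    """Turn four electrode readings into two difference (bipolar) channels.

    Hypothetical pairing: (e1, e2) -> channel A, (e3, e4) -> channel B.
    """
    channel_a = [a - b for a, b in zip(e1, e2)]  # voltage between electrodes 1 and 2
    channel_b = [a - b for a, b in zip(e3, e4)]  # voltage between electrodes 3 and 4
    return channel_a, channel_b


# Usage: the same "noise" added to both electrodes of a pair disappears.
noise = [0.5, -0.25, 0.125]
e1 = [1.0 + n for n in noise]   # 1.0 of real signal plus shared noise
e2 = [0.0 + n for n in noise]   # shared noise only
e3 = [2.0, 2.0, 2.0]
e4 = [1.0, 3.0, 2.0]
channel_a, channel_b = bipolar_channels(e1, e2, e3, e4)
# channel_a == [1.0, 1.0, 1.0]; channel_b == [1.0, -1.0, 0.0]
```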

So what exactly are we measuring? Well, your body produces electrical activity every time you move, and each movement has its own signature. The nice thing about my project is that blinks have a very distinct one: even without AI, a person can easily point out the shape of the signal we’re looking for.

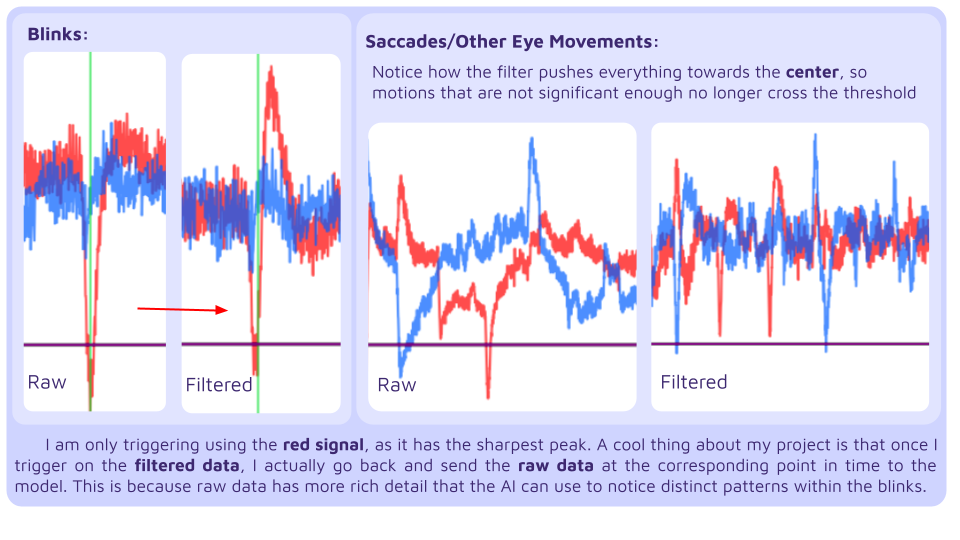

However, there are still other eye movements we have to weed out in order to isolate the blinks, which is why we filter the signal! After testing several filters and parameters, I settled on the high-pass filter seen below to process the data.

As you can see, the high-pass filter flattens the slow, low-frequency parts of the signal toward the center line while letting the sharper, higher-frequency peaks stand out. This is what we want, since low-frequency activity usually comes from non-blink movements.
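For anyone curious what a high-pass filter does under the hood, here is a minimal sketch of the simplest kind: a first-order (single-pole) IIR filter, the digital version of an RC circuit. This is not necessarily the filter type I ended up using, and the cutoff and sampling rate in the usage line (0.5 Hz, 250 Hz) are placeholders, not my actual parameters. It shows the key behavior, though: a constant offset or slow drift is pushed to zero, while sudden jumps like a blink pass through.

```python
import math


def high_pass(samples, cutoff_hz, sample_rate_hz):
    """First-order IIR high-pass filter: y[n] = alpha * (y[n-1] + x[n] - x[n-1])."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # time constant of the equivalent RC circuit
    dt = 1.0 / sample_rate_hz               # time between samples
    alpha = rc / (rc + dt)                  # closer to 1 means a lower cutoff

    out = []
    prev_x = samples[0] if samples else 0.0
    prev_y = 0.0
    for x in samples:
        # Only changes in x feed the output; a constant input decays away.
        y = alpha * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


# Usage (placeholder parameters): a flat baseline followed by a sudden step.
signal = [0.0] * 10 + [1.0] * 10
filtered = high_pass(signal, cutoff_hz=0.5, sample_rate_hz=250)
```

This filter is causal (it only uses past samples), which matters for real time. If you prototype in SciPy instead, `scipy.signal.butter(..., btype="highpass")` with `scipy.signal.lfilter` is also causal, while `scipy.signal.filtfilt` needs the whole recording up front, so it only works offline.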

Now, how do we use this data to classify blinks with AI? Do we just have the AI constantly classify all the data coming through? For my project, I chose the common approach of using a threshold (the purple line in the graphs) to decide when the model should classify. Once the signal crosses this threshold, the system takes a set number of milliseconds of data before and after the crossing point and sends that window to the model.
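Here is a minimal sketch of that threshold-and-window step on a recorded signal. A few things are assumptions on my part for illustration: the signal crosses upward (blink peaks above the threshold), the window is `pre_ms` before the crossing plus `post_ms` starting at it, and after a window is taken the scan skips ahead so a single blink doesn’t trigger several windows. The function name and parameter values are hypothetical.

```python
def extract_windows(signal, threshold, sample_rate_hz, pre_ms, post_ms):
    """Return (crossing_index, window) pairs for each upward threshold crossing.

    Each window is signal[i - pre : i + post], where pre/post are the
    millisecond spans converted to a number of samples.
    """
    pre = int(pre_ms * sample_rate_hz / 1000)    # milliseconds -> samples
    post = int(post_ms * sample_rate_hz / 1000)
    windows = []
    i = 1
    while i < len(signal):
        crossed = signal[i - 1] < threshold <= signal[i]
        # Only keep windows that fit entirely inside the recording.
        if crossed and i >= pre and i + post <= len(signal):
            windows.append((i, signal[i - pre:i + post]))
            i += max(post, 1)  # skip the rest of this window so one blink triggers once
        else:
            i += 1
    return windows


# Usage: two fake "blinks" in a toy signal sampled at 1000 Hz.
toy = [0.0] * 10 + [5.0] * 3 + [0.0] * 10 + [5.0] * 3 + [0.0] * 10
for idx, win in extract_windows(toy, threshold=1.0, sample_rate_hz=1000, pre_ms=4, post_ms=4):
    print(idx, win)
```

Each window that comes out of this has the same length, which is what lets it be fed straight into a fixed-size model input.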

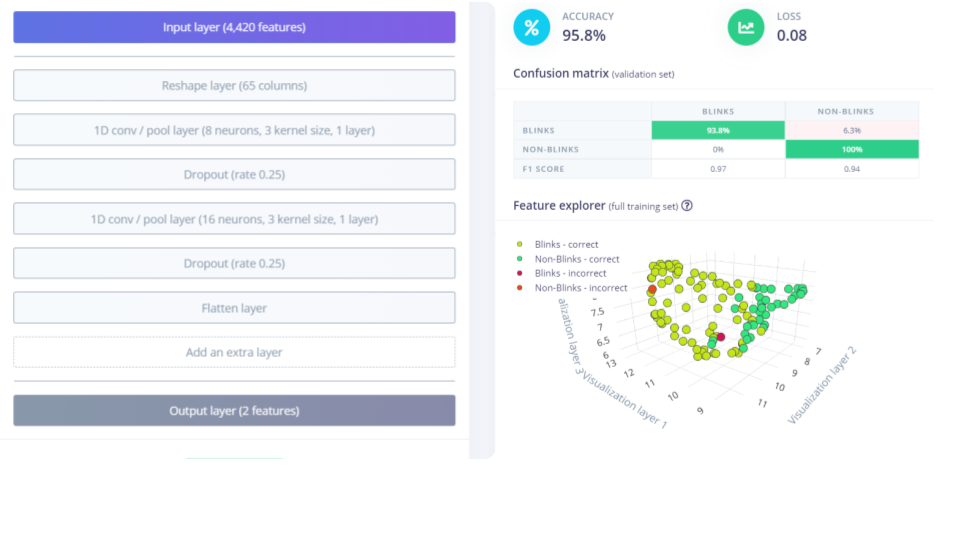

I have already trained a model that currently reaches 94% accuracy (this is on a limited dataset, so the number will likely change as the project advances).

Now that I have this process fleshed out, I need to transfer it to the hardware so it can run by itself. This is where I am currently stuck.

Hardware comes with pre-set filters, maximum sampling rates, and other limitations that can slow the process down, especially with TinyML (running machine learning models on small, low-power microcontrollers). We want to do as much data processing as possible while using as little time and memory as possible. These are some of the issues I’m facing as I move forward with the project.
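One example of the “least time and space” problem: on the glasses there’s no full recording to slice up afterwards, and memory is tight. Below is a minimal sketch of the threshold-and-window idea for a live stream, assuming samples arrive one at a time and window sizes are given directly in samples. Only the last `pre` samples are ever stored, in a fixed-size buffer. This is an illustration in Python; code on an actual microcontroller would more likely be C/C++ with a fixed-size circular array, but the logic is the same. The class name is hypothetical.

```python
from collections import deque


class StreamingWindower:
    """Threshold-triggered windowing over a sample stream, using bounded memory."""

    def __init__(self, threshold, pre, post):
        self.threshold = threshold
        self.post = post                  # samples to collect from the crossing onward (>= 1)
        self.history = deque(maxlen=pre)  # only the most recent `pre` samples are kept
        self.pending = None               # window currently being filled, if any
        self.remaining = 0
        self.prev = None

    def push(self, x):
        """Feed one sample; return a finished window (a list) or None."""
        if self.pending is not None:
            # Still filling the window after a crossing; new crossings are ignored.
            self.pending.append(x)
            self.remaining -= 1
        elif (self.prev is not None and self.prev < self.threshold <= x
              and len(self.history) == self.history.maxlen):
            # Upward crossing with a full pre-trigger buffer: start a window.
            self.pending = list(self.history) + [x]
            self.remaining = self.post - 1
        done = None
        if self.pending is not None and self.remaining <= 0:
            done, self.pending = self.pending, None
        self.history.append(x)
        self.prev = x
        return done


# Usage: feed samples as they "arrive" and hand any finished window to the model.
sw = StreamingWindower(threshold=1.0, pre=4, post=4)
stream = [0.0] * 10 + [5.0] * 3 + [0.0] * 10
for sample in stream:
    window = sw.push(sample)
    if window is not None:
        print(window)
```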

For my project specifically, we want to capture the image as fast as possible to make sure we get the exact moment a person’s eyes are closed. My current model takes 80 ms to run, so one solution I’m considering is to take a photo EVERY time the trigger fires and delete it if the classification comes back negative. That way, waiting on the model never delays the shot, and we don’t miss a thing!
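The capture-first, classify-second idea boils down to a simple ordering, sketched below. The camera hook and the classifier wrapper are hypothetical stand-ins (passed in as plain functions), and “deleting” a photo here just means not keeping it; on real hardware it would depend on where the camera stores images. The point of the sketch is only the order of operations: the photo is taken before the ~80 ms model runs, so the model’s latency can’t make us miss the blink.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence


@dataclass
class CaptureThenVerify:
    """Take a photo immediately on each trigger; keep it only if the model confirms a blink."""
    capture: Callable[[], bytes]                 # hypothetical camera hook
    is_blink: Callable[[Sequence[float]], bool]  # hypothetical wrapper around the classifier
    kept: List[bytes] = field(default_factory=list)

    def on_trigger(self, window: Sequence[float]) -> bool:
        photo = self.capture()     # shoot first: no waiting on the model
        if self.is_blink(window):  # then classify the window that caused the trigger
            self.kept.append(photo)
            return True
        return False               # negative result: the photo is discarded


# Usage with stand-in functions in place of a real camera and model.
shots = iter([b"photo-1", b"photo-2"])
cam = CaptureThenVerify(capture=lambda: next(shots), is_blink=lambda w: max(w) > 3.0)
cam.on_trigger([0.0, 5.0, 0.0])  # True: photo-1 is kept
cam.on_trigger([0.0, 1.5, 0.0])  # False: photo-2 is discarded
```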

When I’m not debugging hardware issues, I’m working on the physical model of the glasses. I started this part last week and have been having loads of fun. The image at the beginning of this post shows the base I’m currently working on, though it will soon be decked out in circuits, wires, electrodes, amplifiers…

One realization I’ve come to while working on this project is how quickly time flies when doing research. Though I’ve made good progress thus far, I thought I’d be farther along by now (tale as old as time). I have started to consider where I need to make sacrifices in order to get a finished prototype working in the weeks I have left.

One thing I’ll likely have to cut is dry electrodes. The overall design will probably end up as more of a fun, techie, steampunk-looking piece than the inconspicuous design I was hoping for. I also don’t expect to have much time for post-processing the images to maximize output quality. That will have to wait for a later iteration, after the initial prototype.

Right now, my focus is on making the prototype work, and I’m excited to see it come out the other side!