—Written by Fredi Mino—

Fancy having a robot hand that mimics your real hand’s gestures?

Me too! That’s why I’m designing one with another helping hand – from our good old friend, TinyML.

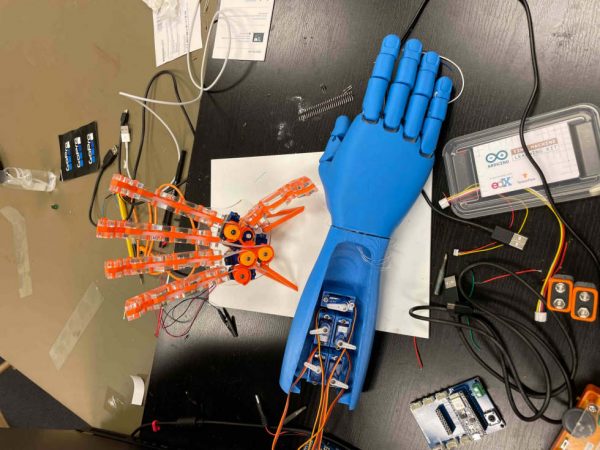

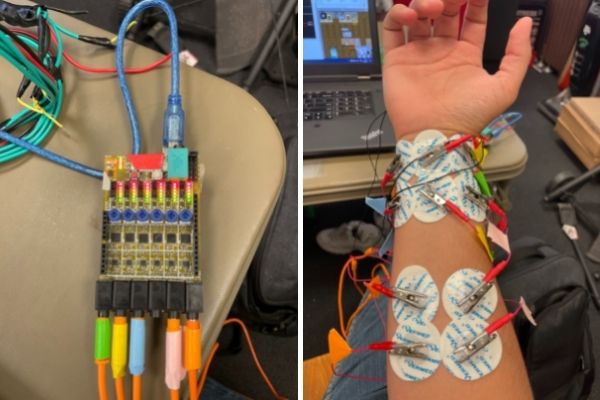

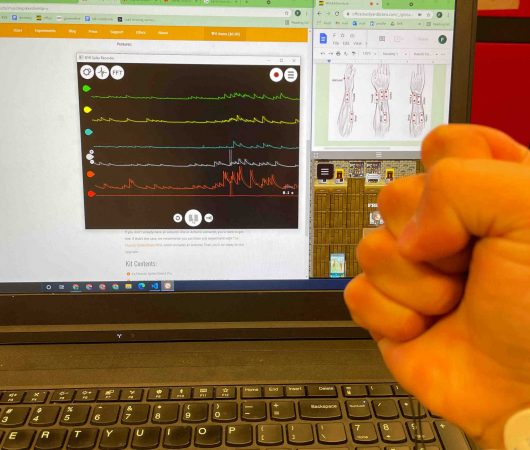

I will do this by measuring the activity of the muscles involved in hand movements using surface electromyography (sEMG). Then, I will use digital signal processing (DSP) algorithms to transform those signals into vectors that a neural network (NN) can understand and translate into commands for the motors controlling the fingers of the robotic hand.
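To make the DSP step a bit more concrete, here is a minimal sketch of how raw sEMG samples could be turned into feature vectors. It is not the pipeline this project uses: the feature choice (mean absolute value, root mean square, and zero-crossing count, all common time-domain EMG features), the window and step sizes, the function names, and the sample values are assumptions made for illustration.

```python
import math


def window_features(samples):
    """Return [MAV, RMS, zero-crossing count] for one window of one channel."""
    n = len(samples)
    mav = sum(abs(x) for x in samples) / n            # mean absolute value
    rms = math.sqrt(sum(x * x for x in samples) / n)  # root mean square
    # Count sign changes between consecutive samples.
    zero_crossings = sum(1 for a, b in zip(samples, samples[1:]) if a * b < 0)
    return [mav, rms, float(zero_crossings)]


def feature_vectors(channels, window=4, step=2):
    """Slide a window over all channels and concatenate per-channel features.

    `window` and `step` are hypothetical values chosen to keep the demo small.
    """
    length = min(len(ch) for ch in channels)
    vectors = []
    for start in range(0, length - window + 1, step):
        vec = []
        for ch in channels:
            vec.extend(window_features(ch[start:start + window]))
        vectors.append(vec)
    return vectors


# Two made-up channels of eight samples each (not real recordings).
demo = [
    [1.0, -1.0, 1.0, -1.0, 2.0, -2.0, 2.0, -2.0],
    [0.0, 0.0, 0.0, 0.0, 3.0, 3.0, 3.0, 3.0],
]
vectors = feature_vectors(demo)
```

Each entry in `vectors` is one input the neural network would see: three numbers per channel, computed over a short slice of time. A real recording would use many more samples per window and would likely be filtered first.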

Easy enough, right? Well…

If we go beyond the initial “wow” factor of the project, you might find yourself asking ‘Why is this important? It sure sounds cool, but a big blue hand doesn’t look like science to me.’

This is because, as amazing as that would be, my project is not really about designing neural interfaces. I am trying to investigate a problem that goes one step up in the abstraction ladder: the problem of translational research in artificial intelligence (AI) and machine learning (ML).

AI and neural networks have been dominating news headlines for almost a decade now. From IBM Watson to GPT-3 and DALL-E, it seems like there’s almost no problem that these algorithms can’t solve. So why is it, then, that these seemingly omnipotent black boxes haven’t left the lab settings in which they were created?

As impressive as they are, neural networks cannot escape one of the fundamental concepts of computer science known as GIGO (Garbage In Garbage Out). The quality of the data you use for your model matters a lot. The models and results that you see in the news rely on curated datasets and structures that have been carefully crafted and reviewed by scientists for that particular study.

However, unlike data from a controlled lab setting, data in the real world is full of inconsistencies and noise. This is why, although every year there seem to be more and more papers with impressive demonstrations, particularly in the domain of neural engineering, these models have not left academia.

I’m a designer at heart. To me, the idea that we can understand a phenomenon well enough to express it in the language of math and computer science has a powerful feel to it. It is almost magical. Given enough understanding of a problem, we can formulate models (a.k.a. algorithms or programs) that can actually interact with and modify physical systems.

However, if we want to control such systems, our program must not be easily affected by noise; in other words, it must be robust. We cannot build a robust neural network unless we train it with the same kind of data that it will be exposed to in real life. This is what makes this summer project stand out among the sea of research on artificial neural networks (ANNs). We will actually test the model in real time and assess how good it is by controlling a physical system (in this case, a robot hand).

With all that in mind, let’s rephrase what my project is actually about.

We have known for decades that EMG signals contain enough information to prove useful in control applications. We have seen AI models solve some of the most difficult challenges, and it doesn’t seem like they are stopping any time soon.

What we don’t know yet, however, is whether this technology has matured enough to be used in real-life applications by the average engineer/tinkerer/customer/teacher/student. This is the question we are asking. Edge Impulse and TinyML not only make this promise, but go further by abstracting away the unnecessary coding barriers that are present in the field, effectively democratizing this technology. The success of my project and my fellows’ projects would be a living proof of concept that AI is finally here, and you, my dear reader, have never been more welcome to try it out!

One of the most powerful concepts I’ve learned in engineering and computer science is the idea of using Abstraction as a tool. That is, the notion that you can divide a complex system into simpler subsystems and interfaces.

So in this case, I am offering you an interface. A robotic hand is a cool system for our NN to control, but it doesn’t have to be a hand! 5 motors are equivalent to 5 degrees of freedom (DOFs), and there are a lot of systems that have 5 DOFs (e.g. robot arms, RC cars, drones, etc.). Additionally, you are not limited to using servo motors either. If you have tinkered enough with electronics, you know that you can scale up to industrial motors. And to raise the bar even higher, you don’t even need to control a physical system. You could use your neural interface to control any software program (maybe give yourself some virtual hands!).
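Here is one way that interface idea could look in code. This is only a sketch under assumptions: it supposes the NN emits five values between 0 and 1, that each servo accepts angles from 0 to 180 degrees, and every name in it (`FiveDofActuator`, `LoggingHand`, `to_servo_angles`, `drive`) is hypothetical rather than part of the project or of any library.

```python
from typing import Protocol, Sequence


class FiveDofActuator(Protocol):
    """Anything that accepts five commands: a hand, an arm, a drone, a program."""

    def apply(self, commands: Sequence[float]) -> None: ...


def to_servo_angles(outputs, min_deg=0.0, max_deg=180.0):
    """Clamp each NN output to [0, 1] and scale it to a servo angle in degrees.

    The 0 to 180 degree range is an assumed default, not a measured limit.
    """
    clamped = [min(1.0, max(0.0, o)) for o in outputs]
    return [min_deg + c * (max_deg - min_deg) for c in clamped]


class LoggingHand:
    """Stand-in for a real hand: records the angles instead of moving motors."""

    def __init__(self):
        self.history = []

    def apply(self, commands):
        if len(commands) != 5:
            raise ValueError("expected exactly 5 commands, one per DOF")
        self.history.append(to_servo_angles(commands))


def drive(actuator: FiveDofActuator, commands: Sequence[float]) -> None:
    """The NN side only knows about the interface, not what sits behind it."""
    actuator.apply(commands)


hand = LoggingHand()
drive(hand, [0.0, 0.5, 1.0, 1.2, -0.3])  # out-of-range values get clamped
```

Because `drive` depends only on the `apply` method, swapping the hand for an RC car or a virtual hand means writing a new class with the same method, without touching the code that produces the commands.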

So join me in this adventure. And while we are at it, why not build a robot hand or two just to make it fun?

About Me

I am an international student from Quito, Ecuador (South America) who recently graduated from UNC Asheville (NC). My major is Mechatronics Engineering with minors in Neuroscience, Computer Science and Mathematics. I am a life-long learner, which is why I spend a lot of my free time tinkering with electronics and learning new programming languages. When I’m not doing so, you can usually catch me watching Sci-Fi/Superhero movies, and occasionally, playing some good ol’ RPGs.