Fellowship: Hello! We are inching towards our goal of giving you a superpower! Last time, I was trying to find the mu rhythms, and I strongly think I may have found them. This consisted of two main steps. One was to find the right montage (electrode locations) which will help us see the mu rhythms. And […]

Fellowship: Is It Actually My Choice To Not Title This Post? Looking doubtful. Since last I wrote about the “Free Will” project, I have increased the volume of data I have to work with and I have organized it into an intuitive MATLAB database for efficient manipulation via a set of functions for Monte Carlo analysis, spectrogram generation, […]

Fellowship: In my last post I claimed my tunnel was done and ready for testing, but boy was I wrong! I’ve spent the last week or so adding supports, finding a way to cover it to prevent the bees from escaping, covering the surroundings to eliminate landmarks (anything in the environment that lets bees keep track […]

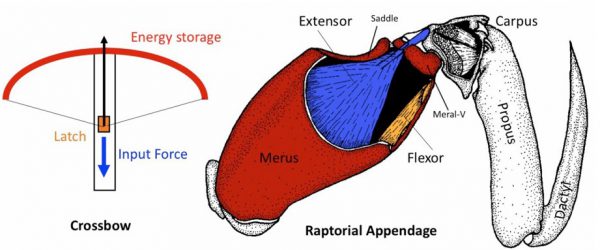

Fellowship: I want you to do me a favor. I want you to hit me as hard as you can. What’s that? You want more background? Folks, things have started to pick up. Perhaps the most important development since June 11th has been the christening of our two mantis shrimp, which will give me an excuse to talk […]

Fellowship: Hey everybody, only two weeks have passed but I have so much to update you on. Earlier this month I was at CRISPRcon in the lovely city of Boston (pictured above), learning about the hopes and fears presented by emerging CRISPR technologies, and this week our Neurorobot has sprouted legs! Or well… Wheels. This new version of […]

Fellowship: Hey guys, it’s Yifan again. There has been a lot of progress since my first blog post. As promised last time, I was able to finish a functional prototype with all the legacies left for me. I put the device in the woods to get recording for the first time. The results, to my pleasant […]

Fellowship: Fresh, organic, locally sourced meditation research. Last Friday, Backyard Brains once again opened our doors (even wider–they’re always open during business hours!) to our fellow and aspiring citizen scientists as a part of this year’s Ann Arbor Tech Trek! Dozens of local tech companies had their doors open to the public that evening and we, like […]

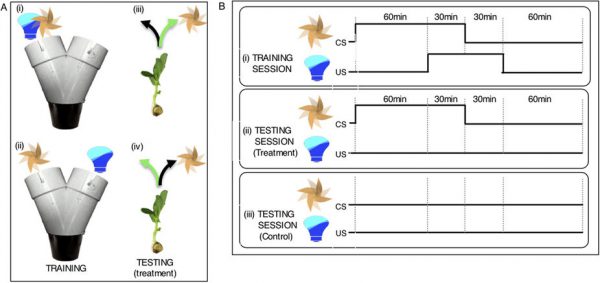

Fellowship: My project, if you remember (see previous post), is to train plants to associate fans with light and hopefully get them to grow in the direction of a fan after they’ve been trained. Radiolab has also done an awesome podcast on this. How hard can it be to set 48 of these up? I’ll split it into […]

Fellowship: Hello, everyone! Jess here. Lots of exciting things have happened in the last two weeks. First, I have begun to raise a group of silkworms into moths (#mothmom). This involves feeding them mulberry leaves from my backyard each day, keeping everything extremely clean and crossing my fingers in hopes that I know what I’m doing. […]

Education: Hello all! My name is Anastasiya and I’m a computer engineering and neuroscience double major at the University of Cincinnati. I’m curious about the world around me and my favorite thing to do is learn. My hobbies include making strange noises, fangirling over the fuel efficiency of my car, and volunteering while spreading knowledge to […]

Fellowship: Without superpowers or a power drill there are only a couple ways we can observe brain activity and most of them require large expensive equipment: Luckily, with the help of Backyard Brains, you can make the equipment yourself! This summer, I’m going to be using such DIY equipment to do some electroencephalography (or EEG) experiments to study […]

Fellowship: Hi everyone! I’m Molly, a senior Biology major at the Georgia Institute of Technology. Since my school’s mascot is a Yellow Jacket, I guess it’s very on-brand for me to be working with a similar insect, honey bees! But ironically, I’ve always thought I was allergic to bees. I had a reaction to a sting […]
