Hello again, my faithful viewers, and thanks for tuning in for another exciting octopus-themed blog post. As always, I am your host, Ilya Chugunov, but today I’ve come with sad news: all good things must come to an end, and this marks the end of my summer research here with Backyard Brains. Now’s the time to grab a hot cocoa, reminisce on what we’ve learned, and talk about what’s still left to do.

First and foremost, if you haven’t already had the chance to look at my previous blog posts, you can see them here:

Studying Aggressive Behavior in Octopodes

Now let’s recap and break this down conversationally.

First, we found out, rather accidentally, that if left together our Bimacs will wrestle each other to assert dominance. This gave us the idea of using computer vision to gather data for analysis with the hope that we could identify some interesting features within their behaviors.

Next, I built my acrylic setup to record the octopuses doing their thing, making sure to have even lighting and a stable mount for my GoPro so that the code didn’t just explode from all the variability.

The first, and most classic, behavior found in our trials with the Bimacs was the “bout”, a little sumo-wrestling fight where each octopus tried to push the other around as far as possible; bouts were common when both octopuses were excited, and each lasted about 5 seconds.

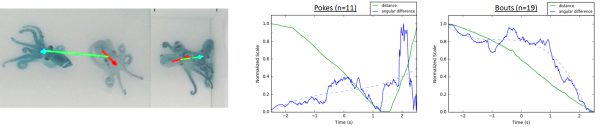

The second curious behavior found was the “poke”, where one octopus wanted to provoke a real fight, but the other just wasn’t feeling it. The more excited octopus would waltz up to the lazy-bones and just briefly tap him with an arm before jetting off across the chamber.

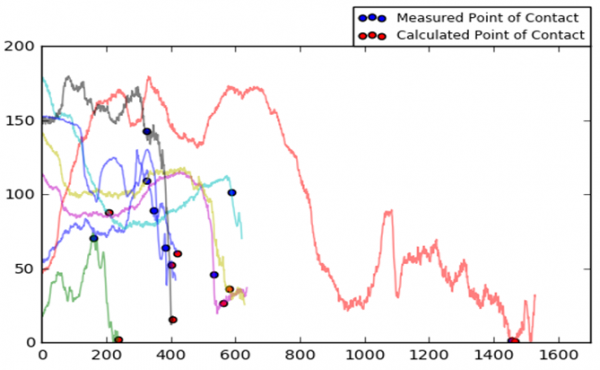

I noticed that in both the bouts and the pokes, right as the distance between the two octopuses closed and they made contact, the angle between them rapidly decreased too. They would approach each other sideways (almost backwards at times), then rapidly spin around right as they got close enough to poke or fight. In the poke behavior, the offending octopus would then spin back around and jet off, while in the bout behavior they’d just stay locked face to face.
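To make the distance-and-angle observation concrete, here is a minimal sketch of how those two numbers could be computed for one frame. It is not the code from my repository: the function name, the idea of describing each octopus by a center point plus a heading vector, and the choice to measure the angle between one octopus's heading and the line toward the other are all assumptions made for illustration.

```python
import math

def distance_and_facing_angle(center_a, heading_a, center_b):
    """Return (distance, angle_in_degrees) for octopus A relative to octopus B.

    center_a, center_b: (x, y) positions in pixels (hypothetical inputs).
    heading_a: (dx, dy) vector for the direction A is facing (hypothetical input).
    The angle is 0 when A faces B head-on, 90 when A is sideways to B,
    and 180 when A has its back turned.
    """
    dx = center_b[0] - center_a[0]
    dy = center_b[1] - center_a[1]
    distance = math.hypot(dx, dy)

    bearing = math.atan2(dy, dx)                      # direction from A to B
    facing = math.atan2(heading_a[1], heading_a[0])   # direction A is pointing

    # Wrap the difference into [-180, 180) before taking the magnitude.
    diff = math.degrees(bearing - facing)
    diff = (diff + 180.0) % 360.0 - 180.0
    return distance, abs(diff)

# A sideways approach: A faces along +x while B sits straight "above" it.
print(distance_and_facing_angle((0, 0), (1, 0), (0, 2)))
```

Tracked frame by frame, a sudden drop in this angle at the same moment the distance closes would be the "spin around" described above.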

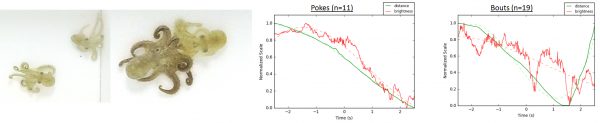

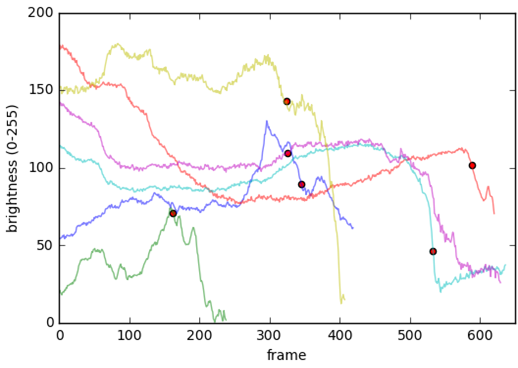

Another notable thing our octopuses do in their fighting ritual is change colors. As I assume you already know, these guys are covered in chromatophores, and they seem to flash a deep black as they go on the offensive (can you tell who the attacker is in that picture above?).

The poke behavior elicited the same response, twice! The first bump was the attacked octopus darkening as the poking octopus approached it, and the second was the poking octopus turning a dark brown as he squirted away.

“But Ilya, how in the world do you process so much video? And how do you know when the fight starts in the first place?”

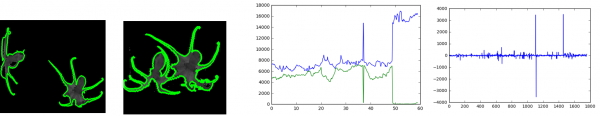

Why, thanks for the question, hypothetical reader. I use a mess of MOG (Mixture of Gaussians) background subtraction, erosion, and band-pass filtering, combined with the OpenCV convex hull functions, to find the general outlines of the octopuses, and then I check whether they’re two separate blobs or one combined megaoctopus. If there are two blobs, the octopuses aren’t in contact; if there’s one, they are. That makes it easy to define first contact and to tell a bout from a poke (long contact vs. short contact).
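The last step, turning blob counts into bouts and pokes, can be sketched in a few lines. This is only an illustration, not my actual pipeline: it assumes the vision step has already produced one blob count per frame, and the function name and the 2-second cutoff between a poke and a bout are hypothetical choices.

```python
def contact_events(blob_counts, fps, bout_min_seconds=2.0):
    """Group consecutive one-blob frames into contact events.

    blob_counts: number of octopus-sized blobs found in each frame
                 (2 = apart, 1 = merged into one "megaoctopus").
    fps: frames per second of the video.
    bout_min_seconds: hypothetical cutoff; contacts at least this long are
                      labeled "bout", shorter ones "poke".
    Returns a list of (first_frame, last_frame, seconds, label) tuples.
    """
    events = []
    start = None
    # The trailing 2 is a sentinel so a contact running to the last frame is closed.
    for i, count in enumerate(list(blob_counts) + [2]):
        in_contact = (count == 1)
        if in_contact and start is None:
            start = i                      # first contact of a new event
        elif not in_contact and start is not None:
            seconds = (i - start) / fps
            label = "bout" if seconds >= bout_min_seconds else "poke"
            events.append((start, i - 1, seconds, label))
            start = None
    return events

# Two frames of contact, a gap, then ten frames of contact, at 2 frames per second.
counts = [2, 2, 1, 1, 2, 2] + [1] * 10 + [2]
for event in contact_events(counts, fps=2):
    print(event)
```

In practice the raw blob counts flicker, so some smoothing before this step would probably be needed.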

Using a simple windowing function and a pretty boring logistic regression, we can take a bunch of our video clips of octopus fights where we’ve already classified when a fight occurs, and from them predict a point of contact in a new video we feed into the algorithm. This is where the concept of machine learning starts to play into the project, letting a program learn from previous octopus video to predict what will happen in new octopus video.
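For the curious, here is a toy version of that idea in plain Python. Everything specific in it is an assumption for illustration: the single feature (a normalized distance between the two octopuses), the trailing moving average standing in for the windowing function, the function names, and the training settings. My real code and features live on GitHub.

```python
import math

def window_means(signal, width):
    """Trailing moving average; windows are shorter at the start of the clip."""
    means = []
    for i in range(len(signal)):
        chunk = signal[max(0, i - width + 1): i + 1]
        means.append(sum(chunk) / len(chunk))
    return means

def sigmoid(z):
    # Written in two branches to avoid overflow for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic(xs, ys, lr=1.0, epochs=5000):
    """Fit p(contact) = sigmoid(w * x + b) by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def first_contact(signal, w, b, width, threshold=0.5):
    """Index of the first frame whose predicted contact probability reaches threshold."""
    for i, x in enumerate(window_means(signal, width)):
        if sigmoid(w * x + b) >= threshold:
            return i
    return None

# A hand-labeled clip: normalized distance per frame, and 1 where contact was marked.
train_distance = [1.0, 0.9, 0.8, 0.6, 0.4, 0.2, 0.1, 0.1]
train_labels = [0, 0, 0, 0, 0, 1, 1, 1]
w, b = fit_logistic(window_means(train_distance, 2), train_labels)

# A new, unlabeled clip.
new_distance = [1.0, 0.9, 0.9, 0.8, 0.8, 0.1, 0.1, 0.1]
print(first_contact(new_distance, w, b, width=2))
```

The moving average smooths out single-frame glitches at the cost of reacting a frame or so late, which is the usual trade-off with this kind of windowing.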

I’ve compiled my research results and created a poster which I presented at a University of Michigan symposium.

What’s next?

For me, Canada. Heading up to Montreal next week.

In general, my code is up on my GitHub and is completely open source, so anyone is welcome to make changes to it and take it in whatever direction they want; you don’t even have to use it on octopuses if you don’t want to.

Now for some musings…

I think there’s a lot still to be done with computer vision and behavioral analysis, and this summer research was just me dipping my toe into the pool. There is much more data we could draw from the same video I was working with: arm position and length, how curled the octopus’s arms were, maybe even heart rate, given enough clever coding. As I continue onward in whatever field of STEM I find myself in next, I hope to keep throwing computational power at problems that don’t seem like they even need a computer, because who knows, maybe they do.

I’ll leave you with some boring philosophy. No one, not a single scientist, knows for certain what the next big thing is going to be. No one knows when or where the next technological revolution will happen, and no one knows whether the next world-changing invention will be made in a million-dollar Elon Musk laboratory or at 3 a.m. by a hungover student in their dorm room. So just know that when you read a blog post like this, about an 11-week undergrad project, even it has the chance to be something big. Not all scientific breakthroughs are made by bearded dudes in lab coats; they could be made by you.