
Education: G’day mate, how are ya? Thank you for waiting for the updates on my research on Human EEG Decoding (aka Mind Reading). Since my last post, I have tried to decode human EEG data in real time and… guess what… I succeeded! Hooray! I first analyzed all the data I have collected so far to verify and evaluate the […]


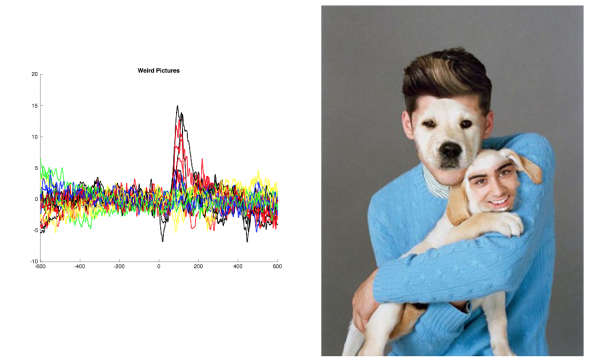

Education: G’day again! I’ve got data… and it is beautiful! More on this below… I am pleased to share an update on my BYB project, Human EEG visual decoding! If you missed it, here’s the post where I introduced my project! Since my first blog post, I have collected data from 6 subjects with the stimulus presentation program […]


Education: Welcome! This is Kylie Smith, a Michigan State University undergraduate writing to you from a basement in Ann Arbor. I am studying behavioral neuroscience and cognition at MSU and have been fortunate enough to land an internship with the one and only Backyard Brains for the summer. I am working on The Consciousness Detector […]
