
Education

Start the presses! Backyard Brains has a new publication! Our Neurorobot paper is titled “Neurorobotics Workshop for High School Students Promotes Competence and Confidence in Computational Neuroscience.” You can read the article in its entirety on the Frontiers in Neurorobotics website, because we believe neuroscience knowledge is for everyone, and no one should have to pay […]

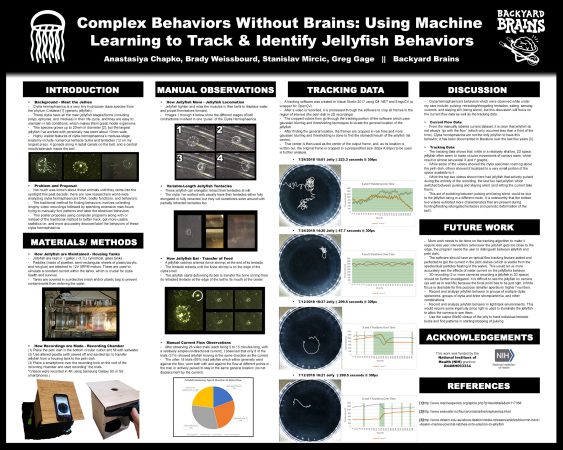

Education

Hello all! The summer fellowship is officially over, but it’s not quite the end of the line for the jellies and me! In this final(?) update to my blog series, I’ll be recalling the findings I’ve made this summer, showcasing the poster I presented at the UROP Symposium, sharing my road trip back home […]

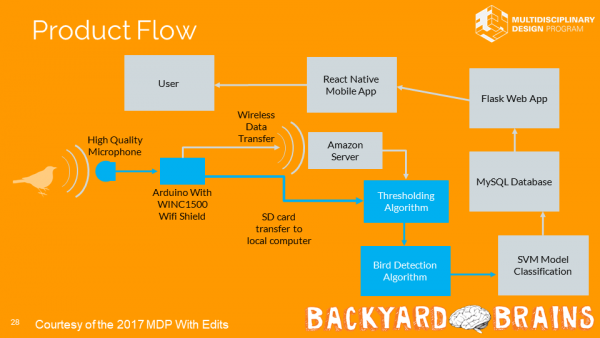

Fellowship

Hello friends, this is Yifan again. As the end of the summer draws near, my summer research is also coming to a conclusion. The work I did over the summer was very different from what I expected. Since this is a wrap-up post for an ongoing project, let us first go through what exactly […]

Fellowship

Hello again, everyone! It’s Yifan here with the songbird project. Like my colleagues, I also attended the 4th of July parade in Ann Arbor, which was a lot of fun. I made a rather rugged cardinal helmet that looks like a rooster hat, but I guess a rooster also counts as a kind of bird, so that […]

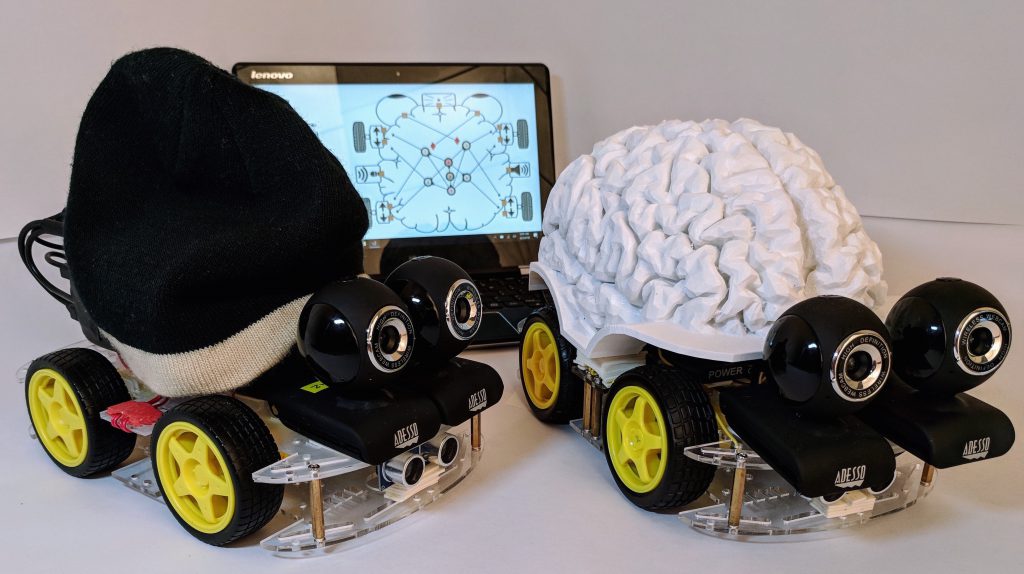

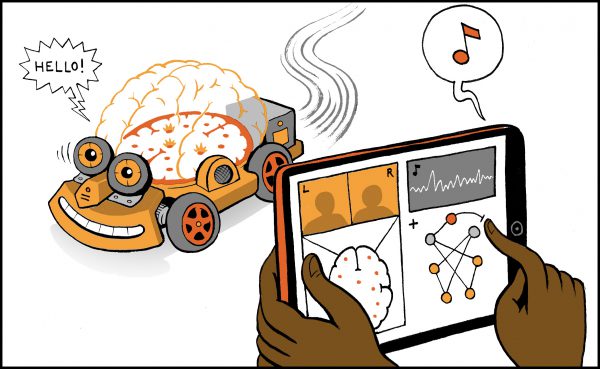

Education

Can robots think and feel? Can they have minds? Can they learn to be more like us? To do any of this, robots need brains. Scientists use “neurorobots” (robots with computer models of biological brains) to understand everything from motor control and navigation to learning and problem solving. At Backyard Brains, we […]

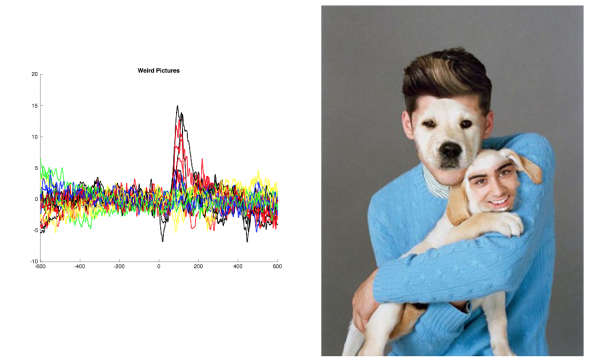

Education

G’day again! I’ve got data… and it is beautiful! More on this below… I am pleased to share an update on my BYB project, Human EEG visual decoding! If you missed it, here’s the post where I introduced my project! Since my first blog post, I have collected data from 6 subjects with the stimulus presentation program […]