
Fellowship

What follows is a tale of customs adventures, personal legal status in a foreign country, and the history of Serbia and Yugoslavia. We have had the good fortune to work in Serbia a number of times over the past few years, and 2024 was no different. During July, we were invited by the Center for the Promotion […]


Fellowship

This summer, we’ve beaten our own record: in just under two weeks, 20 high-schoolers created nine projects using what is shaping up to be our most creative neuroscience kit ever! The tool (or should we say meta-tool?) called Neuro:Bit lets you build brain-machine interfaces (BMIs) that work using your body’s electrical signals. Depending on the project, […]


Experiment

Move aside, air guitars! Thanks to one of our latest projects, it is now possible to air-conduct music so that it actually changes in tempo and volume as you move your arms. This so-called neuro:baton is just one of 12 cool projects being developed during our 2024 Summer Research Fellowship, which is firing up as we speak. […]


Fellowship

Over a dozen busy bees, 5 research projects, 4 hot weeks of July, countless data points, iterations, and coffee cups, and one book of experiments to soak it all up and present to a wider audience: with that, the Backyard Brains 2023 US-Serbian Summer Research Fellowship comes to a close. The result will hit the shelves this fall, with […]


Fellowship

Written by Petar Damjanovic

If I were to show you a photo of a Rolex watch and the face of an unknown person, you’d probably be more interested in the prestigious, shiny object than in the random stranger, right? However, and this may come as a surprise, your brain is much more modest […]


Fellowship

Written by Milica Manojlovic

Aaaand the results are in! So, just a quick recap: we were hoping to gather as much data as possible on how we process the Pinocchio illusion by measuring different behavioral outcomes as well as EMG at three time points. As far as the behavioral measures are concerned, we questioned participants […]


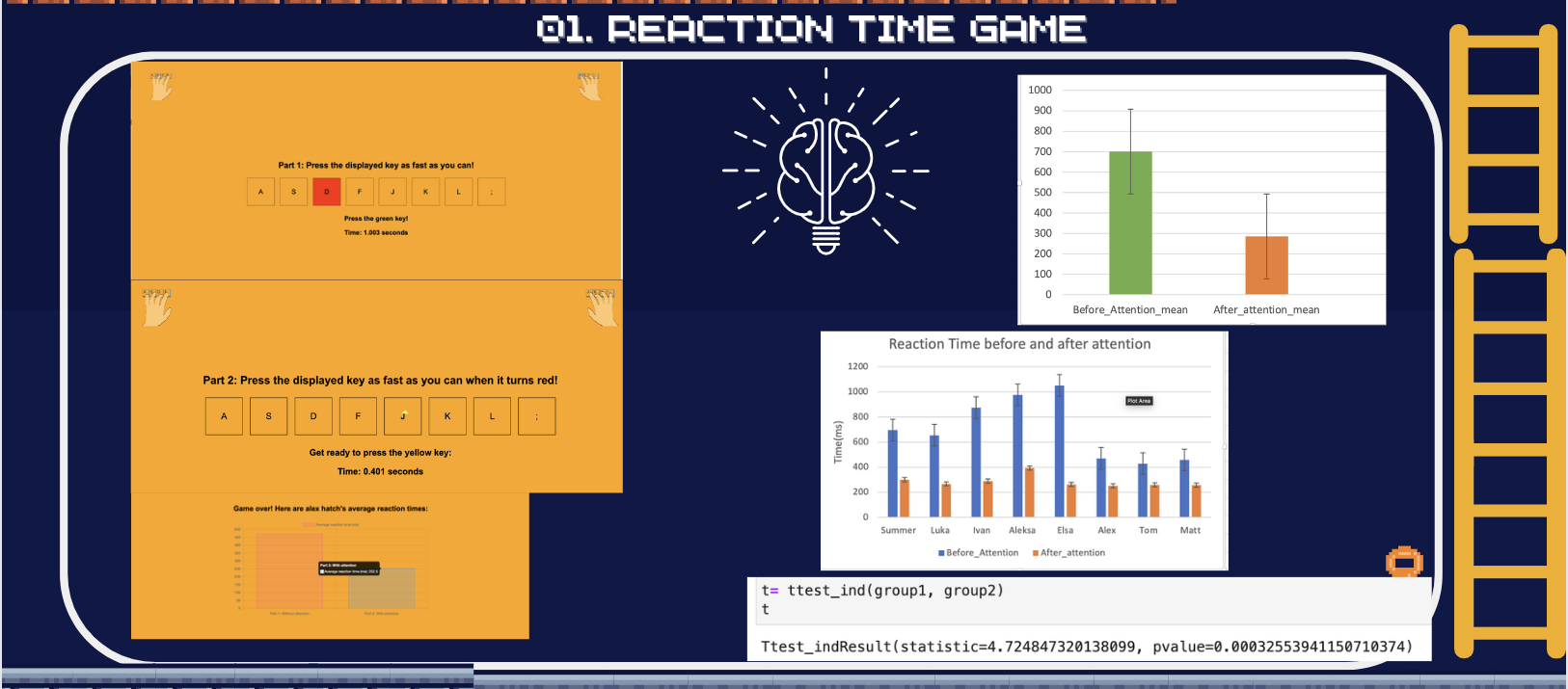

Fellowship

Written by Summer Eunhyung Ann

When we think about science and technology, we often think of something intricate and difficult to comprehend, such as genetic engineering or aerospace technology. However, science and technology are a pivotal part of our everyday lives. From turning off the lights and going to bed at night to […]


Experiment

Written by Amanda Putti & Milica Milosevic

Well, we made it! We’re in the final week of the BYB Fellowship! We faced many challenges throughout this project and had to pivot in order to get results, but we are happy with where it ended up. To give an update on our progress, let’s first start where we left […]


Fellowship

Written by Luka Caric, Elsa Fedrigolli & Tom DesRosiers

Prepare yourselves for another mushroom-tastic journey as we delve into the captivating world of electrical potentials in mushrooms. Join us as we unfold the shocking truths, sprinkle in some mushroom humor, and discover the electrifying mysteries hidden within these fantastic fungi! In our quest […]


Experiment

Written by Milica Manojlovic

We are all familiar with the Pinocchio story, right? A wooden boy whose nose would grow every time he lied. What if I told you that, with the right equipment, you could feel like Pinocchio in just a few minutes? All you need is a massager (>50 Hz frequency and >1 mm […]


Fellowship

Written by Summer Eunhyung Ann

I am Summer, a Computer Science and Neuroscience undergraduate at the University of Michigan, a part-time artist, nerd, researcher at Michigan Medicine, and an intern at Backyard Brains for the summer. My plan is to create a do-it-yourself (DIY) eye tracker to investigate the hypothesis that humans, having evolved to […]


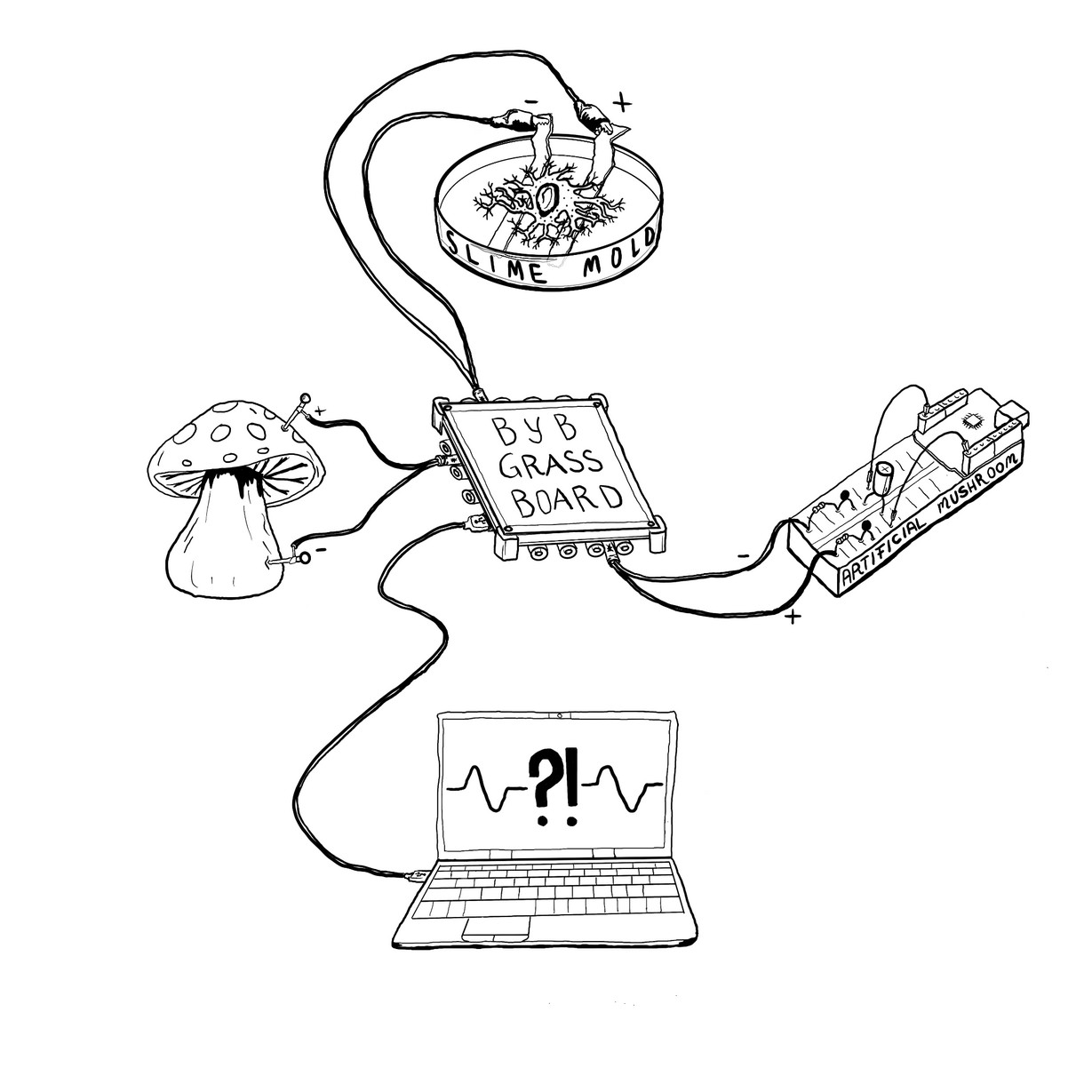

Fellowship

Written by Milica Milosevic & Amanda Putti

Considering the slime craze of 2016, we’re pretty sure we have all played with slime, or at least know what it is. But have you ever heard of slime molds? Slime molds, such as *Physarum polycephalum*, are a type of single-celled protist that is […]
