
Education

Over 11 sunny Ann Arbor weeks, our research fellows worked hard to answer their research questions. They developed novel methodologies, programmed complex computer vision and data processing systems, and compiled their experimental data for poster, and perhaps even journal, publication. But, alas and alack… all good things must come to an end. Fortunately, in research, […]

Education

Today our Summer Research Fellows “snuck in” and presented their summer work at a University of Michigan Undergraduate Research Opportunity Program (UROP) symposium! Over the two sessions, our fellows presented their work and rigs to judges, other students, university faculty, and community members. Some of the fellows are seasoned poster designers, but others had […]

Education

G’day mate, how are ya? Thank you for waiting for the updates on my research on Human EEG Decoding (aka Mind Reading). Since my last post, I have tried to decode human EEG data in real time and… guess what… I succeeded! Hooray! I first analyzed all the data I have collected so far to verify and evaluate the […]

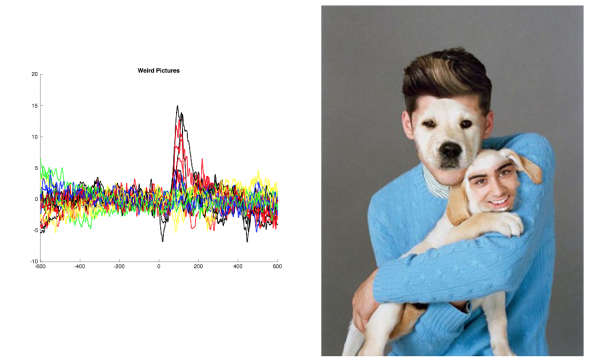

Education

G’day again! I’ve got data… and it is beautiful! More on this below… I am pleased to update my progress on my BYB project, Human EEG visual decoding! If you missed it, here’s the post where I introduced my project! Since my first blog post, I have collected data from 6 subjects with the stimulus presentation program […]

Education

I can see that you’re seeing a weird picture! :0 G’day, I am Nathan. I am a senior majoring in mathematical science and psychology at the State University of New York at Binghamton. Before continuing my studies in the US, I worked for six years in IT and finance after studying management and economics in […]

Education

It’s early on a warm Ann Arbor morning and the office is buzzing with excitement! Our Summer 2017 research fellows are here! Today, our fellows are getting to know the staff and space at Backyard Brains, but more importantly, they’re planning, because for the next ten weeks they will be working on neuroscience and engineering […]